The Cost-Saving Magic of Data Processing Automation

Processing large amounts of information accurately and efficiently is crucial, but it requires substantial compute power, which can be very expensive. This blog explores an automated approach to daily data processing that keeps data flowing reliably from start to finish. Because it needs minimal human intervention and scales resources on demand, it is also a cost-efficient one.

The Power of Automation

Our automated daily processing pipeline tackles significant data volumes with minimal manual intervention. This solution integrates several key components:

- Scalable Processing Units: These units start and stop dynamically to process data efficiently, which helps reduce cloud costs.

- Managed Database System: Scales on demand so that database resources are readily available when needed.

- Kubernetes Pod Start/Stop: Starts and stops Kubernetes pods so that they are available when needed to process data.

- Secure File Transfer: Securely handles file uploads and downloads.

- Data Verification: Ensures data integrity by verifying file checksums.

- Automated Checks and Notifications: Validates data and informs stakeholders of processing statuses.

- Compliance and Cleanup: Maintains data security and regulatory adherence by cleaning up files promptly.

This comprehensive approach guarantees accurate and efficient data processing while upholding high data security standards.
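To make the cost effect of starting and stopping processing units concrete, here is a minimal arithmetic sketch comparing an always-on deployment with one that runs only while data is being processed. The function names, unit count, hourly rate, and daily run time are hypothetical placeholders chosen for illustration, not figures from our pipeline.

```python
def monthly_cost(units: int, hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Cost of running `units` processing units for `hours_per_day` hours each day."""
    return units * hourly_rate * hours_per_day * days


def savings_fraction(always_on: float, on_demand: float) -> float:
    """Share of the always-on cost that is avoided by running on demand."""
    return (always_on - on_demand) / always_on


# Hypothetical inputs: 10 units at 0.50 per unit-hour, 3 hours of processing per day.
always_on = monthly_cost(units=10, hourly_rate=0.50, hours_per_day=24)
on_demand = monthly_cost(units=10, hourly_rate=0.50, hours_per_day=3)

print(f"always on: {always_on:.2f}, on demand: {on_demand:.2f}")
print(f"saved: {savings_fraction(always_on, on_demand):.1%}")
```

With these made-up inputs the on-demand setup runs for one eighth of the hours, so it avoids 87.5% of the compute bill. Real savings depend on actual run times, startup overhead, and provider pricing.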

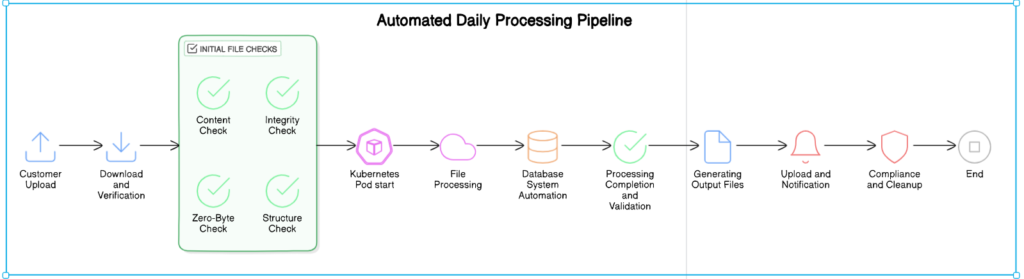

The Processing Journey

- Periodic File Check: Our system periodically checks our secure server for new file uploads. Whenever new files are found, the automated processing pipeline starts, ensuring timely data processing.

- Verification: The system verifies the compressed file’s integrity against the accompanying verification file (a checksum). This ensures the file wasn’t corrupted during upload.

- Initial File Checks: Once verified, the compressed file undergoes a series of checks:

  - Content Check: The file must contain at least one data file (e.g., CSV).

  - Integrity Check: Ensures the file is complete and not corrupted.

  - Zero-Byte Check: No data files should be empty.

  - Structure Check: Each data file is checked for proper data structure.

  Any issues trigger notifications to the relevant parties.

- File Processing: Upon passing the checks, the pipeline launches processing units to handle the data. These units automatically scale up or down as needed, optimizing resource usage.

- Database System Automation: The database system also scales dynamically, ensuring resources are available during processing and freed up afterward, optimizing performance and cost.

- Processing Completion and Validation: After processing, the system performs checks on the generated output files to verify their correctness and completeness.

- Generating Output Files: Following successful validation, the system creates a compressed output file containing the processed data files and a verification file.

- Upload and Notification: The final step involves uploading the output file and verification file to the customer’s designated location. An email notification is sent, informing them that the processed files are ready for download.

- Compliance and Cleanup: To maintain compliance, all uploaded files are automatically deleted within a set timeframe, ensuring data security and privacy. Additionally, the system cleans up temporary resources used during processing, optimizing system performance.
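The verification and initial file checks in the journey above can be sketched as follows. This is an illustrative outline, not our production code: it assumes the compressed file is a ZIP archive, the verification file holds a SHA-256 hex digest as its first token, and the data files are UTF-8 CSVs with a header row. All function names are hypothetical.

```python
import csv
import hashlib
import io
import zipfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash the file in chunks so large uploads need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(archive: Path, checksum_file: Path) -> bool:
    """Compare the archive's digest with the first token of the verification file."""
    tokens = checksum_file.read_text().split()
    return bool(tokens) and sha256_of(archive) == tokens[0].lower()


def check_archive(archive: Path) -> list[str]:
    """Run the initial checks; an empty list means everything passed."""
    try:
        zf = zipfile.ZipFile(archive)
    except zipfile.BadZipFile:
        return ["integrity: not a readable ZIP archive"]
    issues: list[str] = []
    with zf:
        # Integrity check: testzip() returns the first member with a bad CRC, or None.
        bad_member = zf.testzip()
        if bad_member is not None:
            return [f"integrity: {bad_member} is corrupted"]
        data_files = [i for i in zf.infolist() if i.filename.lower().endswith(".csv")]
        # Content check: at least one data file must be present.
        if not data_files:
            issues.append("content: no CSV data file found")
        for info in data_files:
            # Zero-byte check: no data file may be empty.
            if info.file_size == 0:
                issues.append(f"zero-byte: {info.filename} is empty")
                continue
            # Structure check (assumed rule): every row has as many fields as the header.
            with zf.open(info) as raw:
                rows = csv.reader(io.TextIOWrapper(raw, encoding="utf-8", newline=""))
                header = next(rows, [])
                for n, row in enumerate(rows, start=2):
                    if len(row) != len(header):
                        issues.append(f"structure: {info.filename} row {n} has "
                                      f"{len(row)} fields, expected {len(header)}")
                        break
    return issues
```

Returning a list of issues rather than raising on the first failure lets a notification step report every problem in a single message. In a real pipeline the structure check would likely validate column names and types against an agreed schema rather than only counting fields.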

Conclusion

Our automated daily processing pipeline empowers our customers to receive accurate and timely data without manual intervention. This robust system exemplifies our commitment to delivering best-in-class data management solutions. The ability to scale on demand and dynamically resize database systems is a significant cost-saving aspect, reducing the need to run systems sized for peak load continuously.

We continuously strive to innovate and enhance our services to meet the ever-evolving needs of our customers. Stay tuned for more updates on our cutting-edge solutions and how we’re making data management smarter and more efficient!
